Intro

Anyone remember Cleverbot? For a lot of us, this was our first intro to semi-intelligent autonomous chatting. Now I know the inner workings behind it are nothing like an LLM - but it was an intriguing experience to engage with a non-human agent. That fascination persisted, and after I saw a few classmates playing with GPT-1 for their NLP class, I was hooked on the idea of making my own.

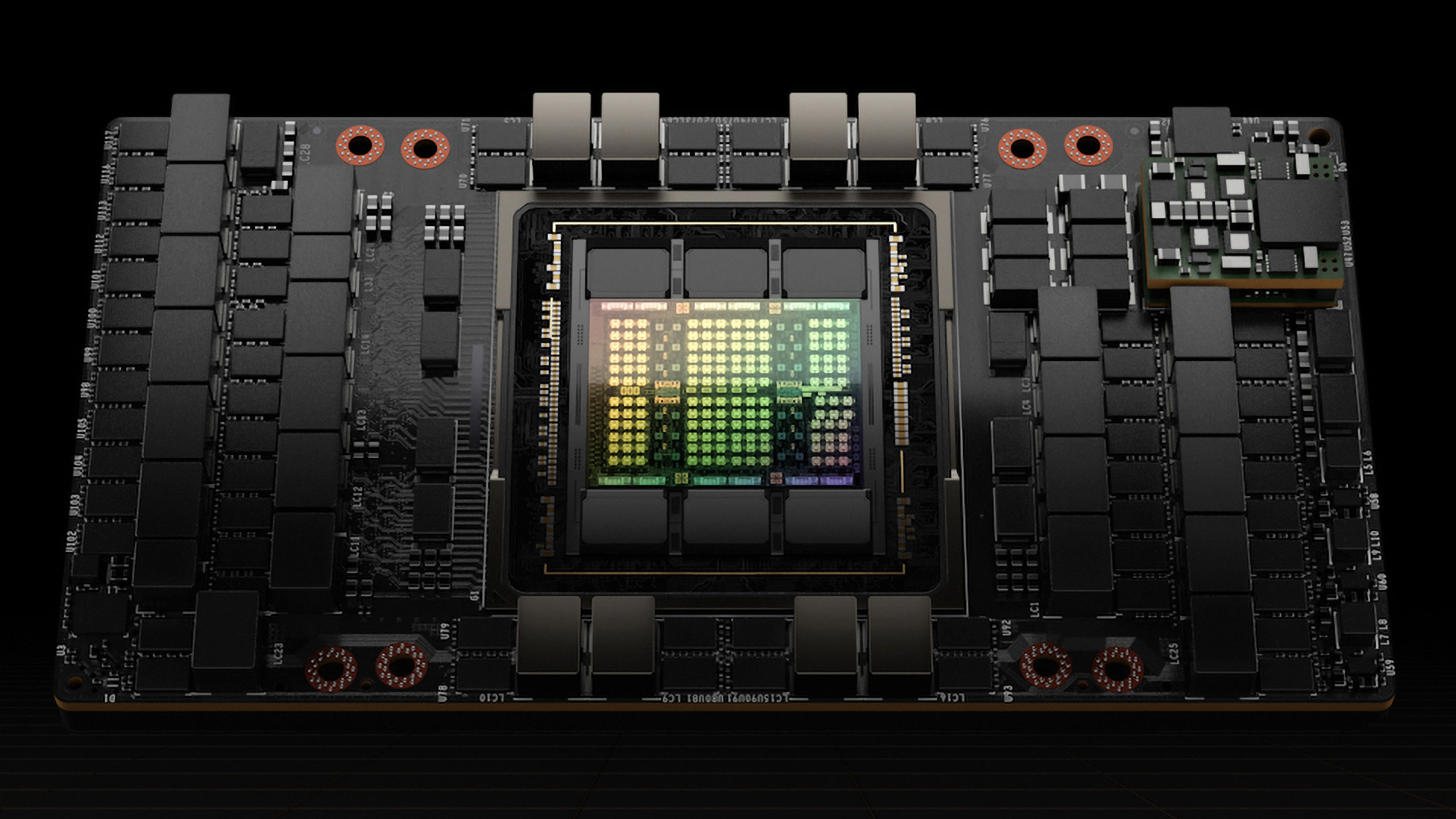

At this point, no novel idea of mine will have the compute behind it to make the next ChatGPT, but I'd like to have some fun along the way and make something of substance at the very least. Enter SLM - the Steinshark Language Model.

Phase 1 - Curate the Data

Data is a lucrative field these days. To be sure, there is plenty of free data out there. The Common Crawl is (more or less) the entire internet - for free. They even cleaned up the HTML to give you the text underneath! But the problem with this data is noise. And there is plenty of noise on the internet... High-quality data is what we're really searching for. And to train a decent model, we need a lot of it.

GPT-1 was trained on 4.7GB of data - around a billion words. That much text would take 11 years for the average Joe to read. Sounds like a lot - but GPT-1 was a toy model at best. GPT-2 upped the game to 40GB of text, this time scraped from web pages linked in Reddit posts. The first recognizably modern model, GPT-3, brought this up to 570GB, 300B tokens, or around 15,000,000 man-hours of reading.
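For a rough feel of where reading-time figures like these come from, here is a back-of-the-envelope sketch. The reading speed (250 words per minute), the words-per-token ratio (0.75), and the 16 waking hours per day are all my assumptions for illustration, not numbers stated above, so treat the results as ballpark only.

```python
# Back-of-the-envelope reading-time estimates.
# All three constants are assumptions chosen for illustration.
WORDS_PER_MINUTE = 250   # assumed average reading speed
WORDS_PER_TOKEN = 0.75   # assumed rough tokens-to-words ratio for English
WAKING_HOURS_PER_DAY = 16  # assumed hours per day spent reading


def tokens_to_words(tokens: float) -> float:
    """Convert a token count to an approximate word count."""
    return tokens * WORDS_PER_TOKEN


def reading_hours(words: float) -> float:
    """Hours needed to read `words` words at the assumed speed."""
    return words / WORDS_PER_MINUTE / 60


def reading_years(words: float) -> float:
    """Years needed when reading every waking hour of every day."""
    return reading_hours(words) / WAKING_HOURS_PER_DAY / 365


if __name__ == "__main__":
    gpt1_words = 1e9                       # "around a billion words"
    gpt3_words = tokens_to_words(300e9)    # 300B tokens
    print(f"GPT-1 corpus: ~{reading_years(gpt1_words):.1f} years of reading")
    print(f"GPT-3 corpus: ~{reading_hours(gpt3_words):,.0f} hours of reading")
```

Change any of the three constants and the totals shift proportionally, which is why estimates like this vary so much from source to source.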

With this type of scaling in mind, the search for a metric heck ton of data commenced. A balance of quantity and quality was needed, and so I hit the books (read: the internet). So, how did I tackle this? Let's find out...

The Data

Free, big, fast, and clean is the "choose only 3" setup for data. At first, I left out "fast". Weeks were spent downloading Common Crawl pages, adjusting my filter, finding something I missed, and repeating. Eventually, I gave up on the autonomy and went crawling to FineWeb, a wonderful collection of highly curated English-language web pages that did a better job than I ever could at filtering. For training I grabbed a 450GB subset of the 51.3TB available and got to work.

Copy-pasting a dataset just didn't fit the vibe of this project, so I went ahead and filtered it further. I took out adult, ad, and spam content, filtered out short articles, and tossed out anything with a language score under 0.93 (fastText classifier score). This chopped the data down to 343GB. Next, I curated a huge list of whitelist phrases, topics, and genres to include in the final dataset. This led to a massive reduction, around 19% of the first-pass text. I sprinkled in some curated StackOverflow posts, Project Gutenberg selections, and YouTube transcripts.
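To make the two filtering passes a bit more concrete, here is a minimal sketch of what such a filter could look like. This is not the actual pipeline: only the 0.93 language-score cutoff comes from the description above. The record fields (`text`, `language_score`), the minimum word count, and the blocklist and whitelist phrases are all hypothetical placeholders.

```python
# Illustrative two-pass document filter.
# Only MIN_LANGUAGE_SCORE is taken from the post; everything else is made up.
MIN_LANGUAGE_SCORE = 0.93
MIN_WORDS = 50  # hypothetical cutoff for "short articles"
BLOCKLIST = ("buy now", "click here", "casino")  # hypothetical ad/spam markers
WHITELIST = ("python", "history", "physics")     # hypothetical topics to keep


def passes_first_pass(text: str, language_score: float) -> bool:
    """Reject low-confidence-English, short, or spammy documents."""
    if language_score < MIN_LANGUAGE_SCORE:
        return False
    if len(text.split()) < MIN_WORDS:
        return False
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKLIST)


def passes_whitelist(text: str) -> bool:
    """Keep only documents mentioning at least one whitelisted phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in WHITELIST)


def filter_documents(docs):
    """Yield the records that survive both passes."""
    for doc in docs:
        text = doc["text"]
        if passes_first_pass(text, doc["language_score"]) and passes_whitelist(text):
            yield doc
```

Plain substring matching is crude (a real filter would more likely lean on classifiers or URL-level rules), but it shows the shape of the idea: cheap checks first, topical selection second.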

Finally, I added a large chunk of Python code from The Stack dataset to improve coding skills in Python-related tasks - and to later build a code tool that provides an execution environment for the model's response generation. And also - of course - a set of Wikipedia articles, which I selected based on historic access data such that only consistently visited pages were trained on.
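One way to read "consistently visited" is that a page has to clear a minimum view count in every period, not just on average. The sketch below assumes exactly that; the input shape (a dict of title to monthly view counts) and the 1,000-view threshold are hypothetical and not taken from the actual selection process.

```python
# Illustrative "consistently visited" selection for Wikipedia pages.
# Input shape and threshold are assumptions made for this example.
def consistently_visited(
    monthly_views: dict[str, list[int]],
    min_views: int = 1000,
) -> list[str]:
    """Return titles whose views met `min_views` in every recorded month."""
    return sorted(
        title
        for title, views in monthly_views.items()
        if views and min(views) >= min_views
    )


if __name__ == "__main__":
    sample = {
        "Steady page": [5200, 4800, 5100],
        "One-month spike": [120, 90000, 80],
        "No data": [],
    }
    print(consistently_visited(sample))
```

Using the minimum instead of the mean is what filters out pages that spiked once (say, after a news event) but are otherwise rarely read.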

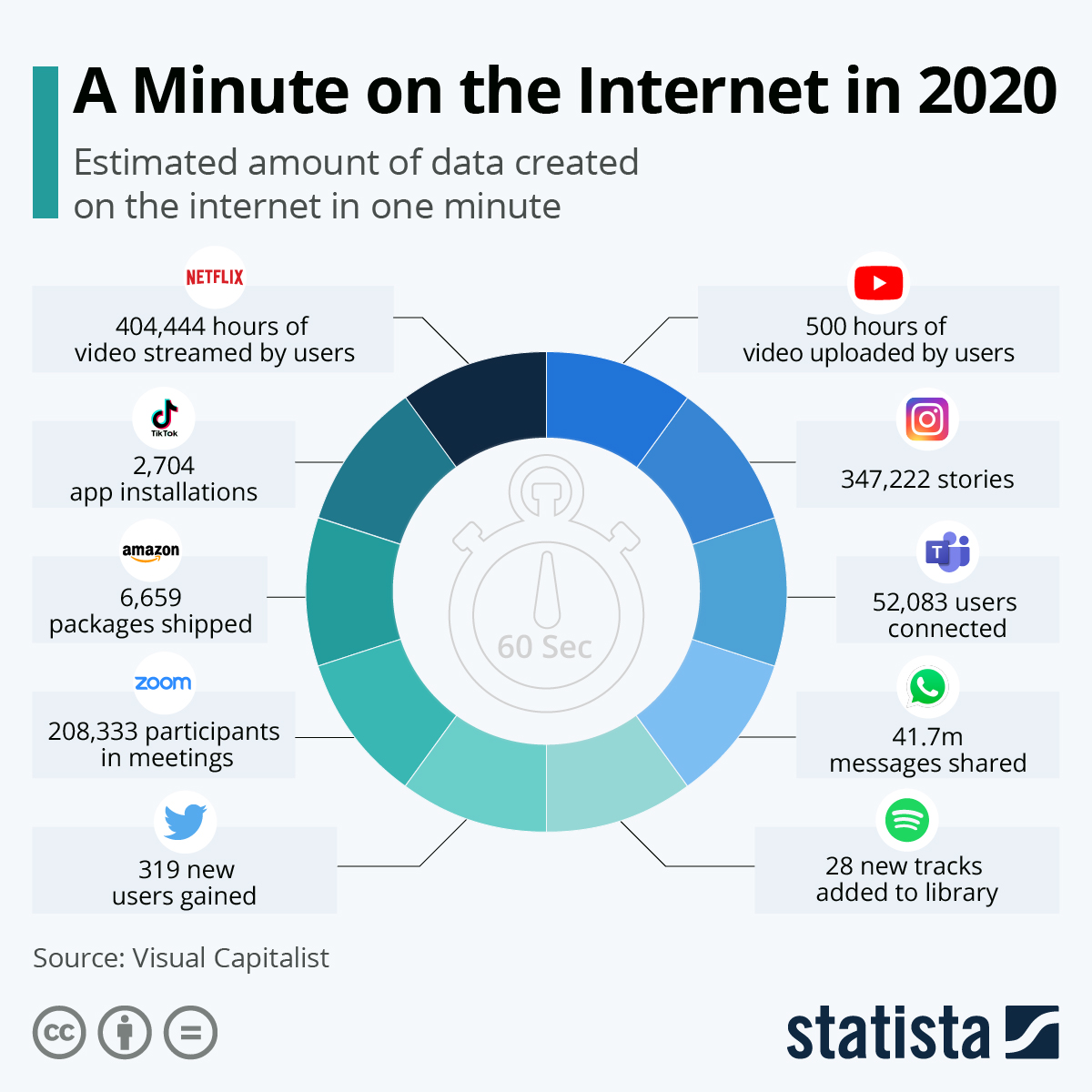

Over 500,000 GB of data is created online every minute.

The key here is that every bit of noise we take out programmatically is noise the model doesn't have to waste parameters on filtering out. Was the weeks-long data curation process worth it? Who knows! Lesson learned about premature optimization, I guess.